Google announced that it will roll out some new tools for DoubleClick Ad Exchange buyers. These features, the company says, will help buyers buy quality inventory and check their campaigns.

The DoubleClick Ad Exchange was launched in September as a real-time marketplace where online publishers and ad networks/agencies can buy and sell ad space for prices set in a real-time auction.

One new feature is called "Site Packs" which the company describes as "manually crafted collections of like sites based on DoubleClick Ad Planner and internal classifications, vetted for quality."

Google is also making changes to its Real-time Bidder. "The biggest change here is for Ad Exchange clients who work with DSPs," says DoubleClick Ad Exchange Product Manager Scott Spencer in an interview with AdExchanger.com (reposted to Google's DoubleClick Advertiser Blog). "Historically, Ad Exchange buyers were hidden from publishers behind their DSP. By introducing a way to segment out each individual client's ad calls, inventory can be sent exclusively to an Ad Exchange buyer even when that buyer uses a DSP. It increases transparency for publishers and potentially give buyers more access to the highest quality inventory, like 'exclusive ad slots' – high quality inventory offered to only a few, select buyers as determined by the publisher."

Google will also roll out a beta of a feature called "Data Transfer", which is a report of all transactions bought/sold by clients on the Ad Exchange.

Rumors surfaced recently that Google was buying Like.com. Those rumors have now been confirmed, as Like.com has announced the news on their site. Founder and CEO Munjal Shah writes:

Since 2006, Like.com has been moving the frontiers of eCommerce forward one step at a time. We were the first to bring visual search to shopping, the first to build an automated cross-matching system for clothing, and more. We didn't stop there, and don't have plans to stop now. We see joining Google as a way to supersize our vision and supercharge our passion. This is something we are truly excited about.

Along the way we built a team that was not just hard working but obsessed with the mission at hand. We are so very proud of this team, and they deserve all the credit for how far we have come. In addition, there are many folks outside the company who have been pivotal to our success. All the Like.com alumni are incredible folks who left our little company better than they found it. Our investors were patient, insightful, and supportive of our plans to build a bigger platform. Our merchant partners were cutting edge and innovative, and in many cases they were willing to try new approaches and new technologies to better the user experience.

Google is obviously impressed with the technology behind Like.com, and it will be interesting to see what they do with it.

Financial details of the acquisition have not been disclosed. TechCrunch says it has heard that the price was over $100 million.

Yesterday, news broke that Google was acquiring social Q&A site Aardvark for about $50 million. Aardvark sent its users an email today saying:

Dear friends,

Aardvark has just been acquired by Google!

Aardvark will remain fully operational and completely free, providing quick, helpful answers to all of your questions. For more information about how the acquisition affects Aardvark users, check out the FAQ that we've put together....

"We want social search to reach hundreds of millions people around the world, and joining with Google lets us reach that scale — we’re also excited to work with the team at Google: our company has a culture that was inspired by Google in many ways, and we have a lot of respect for the folks who work there," the company says in a blog post.

Aardvark is already available in Google Labs. Users will keep the same Aardvark account. It will continue to work under Google.

The company says it will continue to keep introducing new features, fixing bugs, and improving speed and quality. They say the main thing that is going to change is that they will be able to move faster with the support of Google.

User questions and answers will show up in search results from Google, Bing, Yahoo and other search engines if you choose to share them publicly.

Ask (formerly Ask Jeeves) thinks Google is coming after its business.

AT&T has introduced its FamilyMap App for the iPhone, which allows users to track the location of family members.

Users can download the FamilyMap App from the App Store on iPhone or through iTunes. Users can track two phones on an account for $9.99 a month or up to five phones for $14.99 per month. The FamilyMap App can also be used on most other AT&T smartphones. Previously the app was only available via a desktop.

Features of the FamilyMap App include:

- Interactive Map: View whereabouts within an interactive map, including surrounding landmarks such as schools and parks; and, toggle between satellite and interactive street maps.

- Personalize: Assign a name and photo to each device within an account, and label frequently visited locations such as "Bobby's house" and "School."

- Schedule Checks: Use the app to see if a family member is on schedule. Parents can schedule and receive text and email alerts.

- My Places: Set up and view a list of landmarks within the app. Users can display the landmark on the map, edit the landmark's details, and remove or add landmarks.

You may have gotten some good links in the past, but don't count on them helping you forever. Old links go stale in the eyes of Google.

Google's Matt Cutts responded to a user-submitted question asking if Google removes PageRank coming from links on pages that no longer exist. The answer to this question is unsurprisingly yes, but Cutts makes a statement within his response that may not be so obvious to everybody.

"In order to prevent things from becoming stale, we tend to use the current link graph, rather than a link graph of all of time," he says. (Emphasis added)
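To make the idea of a "current" link graph concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not Google's method: the page names are hypothetical, and the rule used (drop any link whose source or target page no longer exists) is a simplification of whatever Google actually does.

```python
from typing import Dict, Set


def current_link_graph(links: Dict[str, Set[str]], live_pages: Set[str]) -> Dict[str, Set[str]]:
    """Keep only links whose source page and target page both still exist."""
    return {
        source: {target for target in targets if target in live_pages}
        for source, targets in links.items()
        if source in live_pages
    }


def inbound_counts(links: Dict[str, Set[str]]) -> Dict[str, int]:
    """Count how many pages link to each target."""
    counts: Dict[str, int] = {}
    for targets in links.values():
        for target in targets:
            counts[target] = counts.get(target, 0) + 1
    return counts


# Hypothetical all-time graph: three pages have linked to mysite.example/.
all_time = {
    "old-blog.example/post": {"mysite.example/"},
    "news.example/story": {"mysite.example/"},
    "forum.example/thread": {"mysite.example/"},
}

# The old blog post has since been deleted, so it is not in the live set.
live = {"news.example/story", "forum.example/thread", "mysite.example/"}

current = current_link_graph(all_time, live)
print(inbound_counts(all_time))
print(inbound_counts(current))
```

In this toy example the deleted blog post stops counting toward the site's inbound links, which is the practical meaning of links going "stale."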

Link velocity refers to the speed at which new links to a webpage are formed, and by this term we may gain some new and vital insight. Historically, great bursts of new links to a specific page have been considered a red flag, the quickest way to identify a spammer trying to manipulate the results by creating the appearance of user trust. This led to Google’s famous assaults on link farms and paid link directories.

But the Web has changed, become more of a live Web than a static document Web. We have the advent of social bookmarking, embedded videos, links, buttons, and badges, social networks, real-time networks like Twitter and Friendfeed. Certainly the age of a website is still an indication of success and trustworthiness, but in an environment of live, real time updating, the age of a link as well as the slowing velocity of incoming links may be indicators of stale content in a world that values freshness.

So how do you keep getting "fresh" links?

If you want fresh links, there are a number of things you can do. For one, keep putting out content. Write content that has staying power. You can link to your old content when appropriate. Always promote the sharing of your content. Include buttons to make it easy for people to share your content on their social network of choice. You may want to make sure your old content is presented in the same template as your new content so it has the same sharing features. People still may find their way to that old content, and they may want to share it if encouraged.

Go back over old content, and look for stuff that is still relevant. You can update stories with new posts adding a fresher take, linking to the original. Encourage readers to follow the link and read the original article, which they may then link to themselves.

Leave commenting on for ongoing discussion. This can keep an old post relevant. Just because you wrote an article a year ago does not mean that people will stop adding to it, and sometimes people will link to articles based on comments that are left.

Share old posts through social networks if they are still about relevant topics. You don't want to just start flooding your Twitter account with tweets to all of your old content, but if you have an older article that is relevant to a current discussion, you may share it, as your take on the subject. A follower who has not seen it before, or perhaps has forgotten about it, may find it worth linking to themselves. Can you think of other ways to get more link value out of old content?

Google has at some point quietly increased its sitemaps limit from 1,000 to 50,000. In a discussion on a Google Webmasters forum thread back in April of last year, Google employee Jonathan Simon said that each sitemap index file can include 1,000 sitemaps.

Just recently, however, David Harkness posted to that same thread, pointing to official Google documentation for sitemap errors, which says under the "Too many Sitemaps" error:

The list of Sitemaps in your Sitemap index exceeds the maximum allowed. A Sitemap index can contain no more than 50,000 Sitemaps. Split your Sitemap index into multiple Sitemap index files and ensure that each contains no more than 50,000 Sitemaps. Then, resubmit your Sitemap index files individually.

The larger number was confirmed by Simon, who came back to the conversation, saying, "Thanks for resurfacing this thread as we've improved our capacity a bit since then. The limit used to be 1,000. The Help Center article you point to is correct. The current maximum number of Sitemaps that can be referenced in a Sitemap Index file is 50,000."

As Barry Schwartz at Search Engine Roundtable, who stumbled across this post, points out, "This is a huge increase in capacity...Still, each Sitemap file can contain up to 50,000 URLs, so technically 50,000 multiplied by 50,000 is 2,500,000,000 or 2.5 billion URLs can be submitted to Google via Sitemaps."

In other words, you can have a lot of sitemaps in one sitemap index file. That's some good information to know, and it is a little surprising that there wasn't a bigger announcement made about this.
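For anyone planning a large site, the two limits quoted above (50,000 URLs per sitemap file and 50,000 sitemaps per index file) can be turned into a quick calculation. The Python sketch below is a rough illustration: the function names and example URLs are hypothetical, and the index builder only emits the basic `sitemapindex` / `sitemap` / `loc` elements from the sitemaps.org schema.

```python
from typing import List, Tuple
from xml.etree import ElementTree as ET

MAX_URLS_PER_SITEMAP = 50_000    # per-file URL limit quoted above
MAX_SITEMAPS_PER_INDEX = 50_000  # per-index limit confirmed in the thread
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def files_needed(url_count: int) -> Tuple[int, int]:
    """Return (sitemap files, sitemap index files) needed for url_count URLs."""
    sitemaps = ceil_div(url_count, MAX_URLS_PER_SITEMAP)
    indexes = ceil_div(sitemaps, MAX_SITEMAPS_PER_INDEX)
    return sitemaps, indexes


def build_sitemap_index(sitemap_urls: List[str]) -> str:
    """Build a minimal sitemap index document listing the given sitemap files."""
    if len(sitemap_urls) > MAX_SITEMAPS_PER_INDEX:
        raise ValueError("Too many Sitemaps: split into multiple index files")
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        entry = ET.SubElement(root, "sitemap")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(root, encoding="unicode")


# Capacity of a single index file under the limits above.
print(MAX_URLS_PER_SITEMAP * MAX_SITEMAPS_PER_INDEX)

# A hypothetical site with 120,000 URLs needs three sitemap files and one index.
print(files_needed(120_000))
print(build_sitemap_index([
    "https://example.com/sitemap-1.xml",
    "https://example.com/sitemap-2.xml",
    "https://example.com/sitemap-3.xml",
]))
```

Only a site with more than 2.5 billion URLs would need a second index file under these limits.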

RDFa, which stands for Resource Description Framework in attributes, is a W3C recommendation, which adds a set of attribute level extensions to XHTML for embedding rich metadata within web documents. While not everyone believes that W3C standards are incredibly necessary to operate a successful site, some see a great deal of potential for search engine optimization in RDFa.

In fact, this is the topic of a current WebProWorld thread, which was started by Dave Lauretti of MoreStar, who asks, "Are you working the RDFa Framework into your SEO campaigns?" He writes, "Now under certain conditions and with certain search strings on both Google and Yahoo we can find instances where the RDFa framework integrated within a website can enhance their listing in the search results."

Lauretti refers to an article from last summer at A List Apart, by Mark Birbeck who said that Google was beginning to process RDFa and Microformats as it indexes sites, using the parsed data to enhance the display of search results with "rich snippets". This results in the Google results you see like this:

"It's a simple change to the display of search results, yet our experiments have shown that users find the new data valuable -- if they see useful and relevant information from the page, they are more likely to click through," Google said upon the launch of rich snippets.

Google says it is experimenting with markup for business and location data, but that it doesn't currently display this information, unless the business or organization is part of a review (hence the results in the above example). But when review information is marked up in the body of a web page, Google can identify it and may make it available in search results. When review information is shown in search results, this can of course entice users to click through to the page (one of the many reasons to treat customers right and monitor your reputation).

Currently Google uses RDFa for reviews, but this search also displays the date of the review, the star rating, the author and the price range of an iPod, as Lauretti points out.
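As a rough illustration of what marked-up review information can look like, the Python sketch below renders a review as RDFa. Treat the details as assumptions: the `v:` property names and the `rdf.data-vocabulary.org` namespace follow the review vocabulary Google documented around the launch of rich snippets, the `review_rdfa` helper and the sample values are hypothetical, and current documentation should be checked before relying on any of it.

```python
from html import escape


def review_rdfa(item: str, reviewer: str, date_iso: str, rating: float, summary: str) -> str:
    """Render one review as an RDFa-annotated HTML fragment (illustrative only)."""
    return (
        '<div xmlns:v="http://rdf.data-vocabulary.org/#" typeof="v:Review">\n'
        f'  <span property="v:itemreviewed">{escape(item)}</span>\n'
        f'  Reviewed by <span property="v:reviewer">{escape(reviewer)}</span>\n'
        f'  on <span property="v:dtreviewed" content="{escape(date_iso)}">{escape(date_iso)}</span>.\n'
        f'  <span property="v:summary">{escape(summary)}</span>\n'
        f'  Rating: <span property="v:rating">{rating}</span>\n'
        '</div>'
    )


# Hypothetical review data, used only to show the shape of the markup.
print(review_rdfa("iPod & case", "Jane Doe", "2010-01-15", 4.5, "Great sound, easy to use."))
```

The visible text stays the same for human readers; the attributes simply tell a parser which piece of text is the reviewer, the date, the rating and so on.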

Best Buy's lead web development engineer reported that by adding RDFa the company saw improved ranking for respective pages. They saw a 30% increase in traffic, and Yahoo evidently observed a 15% increase in click-through rates. (via Steven Pemberton)

Implications for SEO

I'm not going to get into the technical side of RDFa here (see resources listed later in the article), but I would like to get into some of the implications that Google's use of RDFa could have on SEO practices. For one, rich snippets can show specific information related to products that are searched for. For example, a result for a movie search could bring up information like:

- Run time

- Release Date

- Rating

- Theaters that are showing it

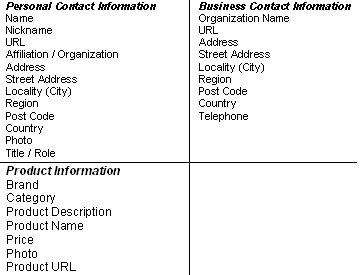

"The implementation of RDFa not only gives more information about products or services but also increases the visibility of these in the latest generations of search engines, recommender systems and other applications," Lauretti tells WebProNews. "If accuracy is an issue when it comes to search and search results then pages with RDFa will get better rankings as there would be little to question regarding the page theme." (Source) He provides the following chart containing examples of the types of data that could potentially be displayed with RDFa:

"It is obvious that search marketers and SEOs will be utilizing this ability for themselves and their clients," says Lauretti. Take contact information specifically. "Using RDFa in your contact information clarifies to the search engine that the text within your contact block of code is indeed contact information." He says in this same light, "people information" can be displayed in the search results (usually social networking info). You could potentially show manufacturer information or author information.

RDFa actually has implications beyond just Google's regular web search. With respect to Google's Image search, the owner of images can also use RDFa to provide license information about the images they own. Google currently allows image searchers to have images displayed based on license type, and using RDFa with your images lets the search bots know under which licenses you are making your images available (Via Mark Birbeck). There is also RDFa support for video.

Google Introduces Rich Snippets

Introduction to RDFa

RDFa Primer

About RDFa (Google Webmaster Central)

RDFa to Provide Image License Info

RDFa Microformat Tagging For Your Website

For Businesses and Organizations

About Review Data (Google Webmaster Central)

Google's Matt Cutts has said in the past that Google has been kind of "white listing" sites to get rich snippets, as Google feels they are appropriate, but as they grow more confident that such snippets don't hurt the user experience, then Google will likely roll the ability out more and more broadly. This is one thing to keep an eye on as the year progresses, and is why those in the WebProWorld thread believe RDFa will become a bigger topic of discussion in 2010.

The first Matt Cutts Answers Questions About Google video of the year has been posted, and in it Matt addresses links from Twitter and Facebook, after talking about his shaved head again. Specifically, the submitted question he answers is:

Links from relevant and important sites have always been a great way to get traffic & acceptance for a website. How do you rate links from new platforms like Twitter, FB to a website?

Essentially, Matt says Google treats links the same whether they are from Facebook or Twitter, as they would if they were from any other site. It's just an extension of the PageRank formula, where it's not the number of links, but how reputable those links are (the company uses a similar strategy for ranking Tweets themselves in real-time search).

"At least in our web search (our organic rankings), we treat links the same from Twitter or Facebook or, you know, pick your favorite platform or website, just like we'd treat links from Wordpress or .edus or .govs or anything like that," says Cutts. "It's not like a link from an .edu automatically carries more weight or a link from a .gov automatically carries more weight. But, the specific platforms might have issues, whether it's not being crawled or it might be nofollow. It would keep those particular links from flowing PageRank."
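The point about reputation versus raw link counts, and about nofollow links not passing PageRank, can be seen in a toy version of the textbook PageRank calculation. The Python sketch below is a simplified illustration and not Google's ranking code: the page names are hypothetical, nofollow links are simply dropped from the graph, and the damping factor of 0.85 is the value commonly used in textbook treatments.

```python
from typing import Dict, List, Tuple

Link = Tuple[str, str, bool]  # (source page, target page, is_nofollow)


def pagerank(links: List[Link], damping: float = 0.85, iterations: int = 50) -> Dict[str, float]:
    """Textbook power-iteration PageRank; nofollow links pass no rank."""
    pages = sorted({page for source, target, _ in links for page in (source, target)})
    outbound: Dict[str, List[str]] = {page: [] for page in pages}
    for source, target, nofollow in links:
        if not nofollow:
            outbound[source].append(target)

    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Pages with no followed outbound links spread their rank evenly.
        dangling = sum(rank[page] for page in pages if not outbound[page])
        new_rank = {page: (1 - damping) / n + damping * dangling / n for page in pages}
        for page in pages:
            for target in outbound[page]:
                new_rank[target] += damping * rank[page] / len(outbound[page])
        rank = new_rank
    return rank


links: List[Link] = [
    # Five pages link to "reputable", which makes it a well-regarded source.
    ("f1", "reputable", False), ("f2", "reputable", False), ("f3", "reputable", False),
    ("f4", "reputable", False), ("f5", "reputable", False),
    # site_a has a single link, but it comes from the reputable page.
    ("reputable", "site_a", False),
    # site_b has three links, all from pages nobody links to.
    ("x1", "site_b", False), ("x2", "site_b", False), ("x3", "site_b", False),
    # site_c is linked from the reputable page too, but the link is nofollow.
    ("reputable", "site_c", True),
]

rank = pagerank(links)
for page in ("site_a", "site_b", "site_c"):
    print(page, round(rank[page], 4))
```

In this toy graph, site_a's single link from a well-linked page outweighs site_b's three links from unknown pages, and site_c gains nothing from its nofollow link.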

There you have it. Matt's response probably doesn't come as much of a surprise to most of you, but it's always nice to hear information like this straight from Google.

U.S. sales of video games, which include portable and console hardware, software and accessories, generated revenues of close to $19.6 billion in 2009, an 8 percent decrease from the $21.4 billion generated in 2008, according to The NPD Group.

Retail sales in the PC game software industry also saw declines, with revenues down 23 percent, reaching $538 million in 2009. The total console, portable and PC game software industry hit $10.5 billion, an 11 percent decrease compared to the $11.7 billion generated in 2008.

"December sales broke all industry records and underscores the incredible value consumers find in computer and video games even in a down economy," said Michael D. Gallagher, president and CEO of the Entertainment Software Association, the trade group which represents U.S. computer and video game publishers.

"This is a very strong way to transition into 2010. I anticipate these solid sales numbers to continue upward through 2010 with a pipeline full of highly-anticipated titles."

Portable hardware was a bright spot with a 6 percent increase in revenue in 2009, while the remaining video game categories all saw declines, with the largest decrease coming from console hardware (-13%). Console software and portable software both saw declines of 10 percent, while video game accessories saw a 1 percent dip.

"When we started the last decade, video game industry sales, including PC games, totaled $7.98B in 2000," said Anita Frazier, industry analyst, The NPD Group.

"In ten years, the industry has changed dramatically in many ways, but most importantly it has grown over those years by more than 250 percent at retail alone. Considering there are many new sources of revenue including subscriptions and digital distribution, industry growth is even more impressive."

Perpetrators of click fraud are getting sneakier and sneakier. Harvard Business School professor Ben Edelman has uncovered one of the more diabolical click fraud schemes known to be hatched. As he summarizes it:

Here, spyware on a user's PC monitors the user's browsing to determine the user's likely purchase intent. Then the spyware fakes a click on a Google PPC ad promoting the exact merchant the user was already visiting. If the user proceeds to make a purchase -- reasonably likely for a user already intentionally requesting the merchant's site -- the merchant will naturally credit Google for the sale. Furthermore, a standard ad optimization strategy will lead the merchant to increase its Google PPC bid for this keyword on the reasonable (albeit mistaken) view that Google is successfully finding new customers. But in fact Google and its partners are merely taking credit for customers the merchant had already reached by other methods.

Edelman details all of the specifics about his discovery, pointing to an example perpetrator, Trafficsolar, which he blames InfoSpace for connecting Google to. He also suggests Google discontinue its relationship with InfoSpace and other partners who have their own chains of partners, making everything harder to monitor. In his example, he finds an astounding seven intermediaries in the chain between the click and the Google ad itself.

"Furthermore, Google styles its advertising as 'pay per click', promising advertisers that 'You're charged only if someone clicks your ad,'" says Edelman. "But here, the video and packet log clearly confirm that the Google click link was invoked without a user even seeing a Google ad link, not to mention clicking it. Advertisers paying high Google prices deserve high-quality ad placements, not spyware popups and click fraud."

As Andy Greenberg with Forbes points out in an article, which brought Edelman's findings to the forefront of mainstream exposure (and likely to Google's attention), Edelman has a history of criticizing Google, is actually involved with a lawsuit involving misplacement of Google ads, and has served as a consultant to Microsoft, but maintains that this research is not funded by Microsoft or a company involved in that lawsuit. Greenberg reports:

As for its ability to detect the new form of click fraud, Google has long argued that it credits advertisers for as much as 10% of their ad spending based on click fraud that the company detects. While the company wouldn't comment on Edelman's TrafficSolar example, a spokesperson wrote that the company uses "hundreds of data points" to detect fraud, not just clicks.

In a report last October, click fraud research firm Click Forensics measured click fraud at around 14%, significantly higher than Google's estimates. But even Click Forensics may not be counting the sort of click fraud Edelman accuses TrafficSolar of committing. Because Click Forensics' data is pulled from advertisers, the company can't necessarily detect click fraud that is disguised as real customers and real sales, according to the company's chief executive, Paul Pellman. Pellman believes, however, that the kind of click fraud Edelman discovered is likely mixed with traditional click fraud to increase the scheme's traffic volume while keeping it hidden.

Click Forensics' own Steve O'Brien says "it was probably a fairly low-volume scheme to begin with. It's limited to machines of users that are infected with spyware who also visit select Google advertisers...It's a problem, but probably not a huge one. What would make it more serious is if there were another version of the spyware that simply clicks on paid links in the background without the user’s knowledge..."

As for Edelman's suggestion that Google sever ties with InfoSpace and the like, O'Brien doesn't think it is worth going that far. "A better solution would be for Google and InfoSpace to deal only with reputable partners who provide verified, audited clicks to ensure advertisers get what they pay for," says O'Brien.

Though Click Forensics appears to downplay the threat compared to Edelman's own analysis, it shows the increasing sophistication with which fraudsters are carrying out their plots. Good times.

Remember when Google was just a search engine? We often still think about it that way, yet we are frequently reminded of the breadth of product offerings and ultimately the power the company possesses. Power, or energy rather, is actually something Google could end up selling in the future.

Google recently applied for approval from the Federal Energy Regulatory Commission for the right to purchase and monetize energy, just like the utility companies you are already familiar with do. Google has said that its actions had more to do with the enormous amount of energy it consumes itself (consider all of the machinery and equipment it takes to keep a company like Google running at its current pace on a daily basis).

Questions have been raised, however, about whether Google could actually end up functioning as a utility. From the sounds of it, the company isn't exactly ruling it out.

Jeffrey Marlow with the New York Times asked Google's "Green Energy Czar" Bill Weihl if the company views its work on alternative energy as a money-making component. In his response, Weihl noted that some of Google's initiatives come from Google.org (the company's philanthropic arm), and said:

The reason Google.org is not just a foundation is that lots of people believe that if you want to have a big impact at scale on the world, then you need to go beyond what a 501(c)3 can do, which is to make charitable grants, so you need the ability to invest in companies, to do engineering projects, to do things that might at some point actually make money.

We’d be delighted if some of this stuff actually made money, obviously; it is not our goal to not make money. All else being equal, we’d like to make as much money as we can, but the principal goal is to have a big impact for good.

Google says its goal is to make renewable energy cheaper than coal. Coal is said to be the source of about half of the electricity consumed in the US. Google is looking at concentrated solar thermal, enhanced geothermal, and wind energies.

U.S. consumer technology retail sales fell less than one percent for the 2009 holiday season, according to a new report from The NPD Group.

The report found sales for the five-week holiday period reached $10.8 billion, a big improvement from the 6 percent decline during the 2008 holiday season.

The holiday season had its share of fluctuations. Overall revenue declined in three of the five weeks. While the second week of the season posted the largest revenue growth, it only accounted for 15 percent of overall holiday sales. The final week of the season also saw revenue growth and represented 22 percent of all sales, so the success of the final week was more relevant to the overall success of the season.

"The dynamics of the holiday season changed this year; the holiday season started before Black Friday as retailers ran Black Friday-like sales throughout November," said Stephen Baker, vice president of industry analysis at NPD.

"That move may have lessened the Black Friday hype for consumers, but the increase during the final week of the season is a sign that consumers either went back out or waited it out to get the best deal."

PCs and flash-based camcorders were popular this holiday, leading the way in unit growth among large categories, but total sales numbers were dependent on the success of PCs and flat-panel TVs. Combined they accounted for 41 percent of the revenue over the five-week holiday period, up from 39 percent in 2008 and 34 percent in 2007.

Despite the high revenue, flat-panel TVs registered a decline in dollars of 13 percent, in line with their performance during most of 2009. That decline pulled down the industry overall. MP3 players, for the third year in a row, were the largest unit volume category despite increased ASPs and declining unit volumes.

"Just cutting prices this year was not enough to guarantee successful sales results," said Baker. "Flat-panel TVs had a disappointing holiday because there wasn't enough price-cutting on the right items, while notebook PCs and camcorders offered new form factors and price points that drove enormous increases in units and revenue despite falling prices."
